As industry began a widespread proliferation of programmable logic controllers (PLCs) and supervisory control and data acquisition systems (SCADA), one of the positive features offered was remote data collection and monitoring. Few would argue that the transition to modern-day centralized management has had a major impact on improving productivity. However, some issues remain.

From an operator perspective, in the early days of PLCs and SCADA systems, much of the industry was not ready to deal with programming and operating computer-based controls, which were far more complicated than the existing stand-alone controls they replaced. This was further aggravated by the proprietary nature of early products, which also lacked robust remote communication capability. Many of us remember the days when much of remote communication was provided by phone modems, which were both slow and unreliable. As the technology matured, customer demands brought forth non-proprietary protocols and high-speed Ethernet communications.

Here we are decades later and yet there is still a sense that we have more to do to take full advantage of the systems that operate our plants and buildings. Many operators look upon their systems as more of a complicated hindrance than a help. In commercial buildings in particular, few owners do much data collection at all because the process is often tedious and unrewarding.

The fact is that even with the most functional dashboard or SCADA system, the vast majority of the data does not reveal much. It is the proverbial needle in the haystack. As a result, in that rare instance when something interesting does appear in the data, it often gets overlooked.

Moreover, the types of alarms most systems provide are like snapshots, using instantaneous values to trigger an event. SCADA providers have spent countless hours developing glitzy graphics for alarms, and there is a case to be made that operators know when the production lines go down or angry tenants are too warm and can respond much sooner than they could have without such alarms. The problem is that the operator is often still left wondering whether the core issue is a broken belt on the line, a window left open, a mechanical failure, or a bad sensor. In many cases, it still takes too much time to diagnose and resolve issues. It is reminiscent of the infamous oil light on older-model cars: by the time you saw it, the engine was already damaged.

The Experiment

In 2007 I began experimenting by getting raw data from computerized control systems in commercial buildings and running mathematics to determine faults. The concept was to get as much value as possible from existing sensors without adding cost. The University of Texas defined this as Automated Fault Detection and Diagnostics, or for those who prefer acronyms, AFDD. After overcoming a very steep learning curve, we had our software up and running in 2007 and used it on several thousand sites. Through this remarkable experiment, I was able to make some very interesting and somewhat surprising observations that I would like to share.

One of the most interesting observations was related to catastrophic equipment failures. Armed with limited statistical information from the most experienced equipment insurer I know, Hartford Steam Boiler, I was convinced that I could reduce catastrophic failures by roughly 30 percent. In large part, my estimate was based on an article published in Hartford Steam Boiler’s Locomotive magazine back in 1980 by Raymond F. Stevens. Mr. Stevens presented statistics on eight years of data representing some 15,760 failures of air conditioning and refrigeration equipment. For example, back in 1980, owners could expect a motor failure on a centrifugal compressor after 10 years and a major mechanical failure in 15 years. Large reciprocating compressors for air conditioning had a life span of 10 years, and for refrigeration compressors it was eight years.

More important were the causes of failures. For example, 84 percent of motor failures in hermetic motors were attributed to winding failure. Even in those days, motor manufacturers proclaimed that all winding material was designed to operate literally indefinitely as long as the motor temperature remained at or below its design temperature condition. The caveat was that if the motor winding temperature reached just 10 degrees Centigrade above that design condition, the winding life would be decreased by approximately 50 percent. That is a pretty revealing statement; the takeaway is that abuse is far more destructive than age. It leaves one to consider what the actual useful life of equipment could be if abuse never happens. Based on the statistics presented by Mr. Stevens, a 30 percent reduction of failures seemed reasonable.
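To put numbers on the winding-temperature caveat, here is a minimal sketch. The only figure taken from the manufacturers' claim is the halving of life at 10 degrees above design. Extending that to other temperatures by repeated halving is an assumption (a common rule of thumb for insulation), and the function name is purely illustrative.

```python
def winding_life_fraction(degrees_c_over_design: float,
                          halving_step_c: float = 10.0) -> float:
    """Fraction of rated winding life left at a sustained over-temperature.

    Assumption: life halves for every `halving_step_c` degrees above design.
    Only the 10-degree, 50-percent point comes from the article itself.
    """
    if degrees_c_over_design <= 0:
        return 1.0  # at or below design temperature: no reduction assumed
    return 0.5 ** (degrees_c_over_design / halving_step_c)


for over in (0, 5, 10, 20):
    fraction = winding_life_fraction(over)
    print(f"{over:>2} C over design -> {fraction:.0%} of rated life")
```

Under this assumption, a motor that routinely runs 20 degrees hot keeps only about a quarter of its rated winding life, which is the sense in which abuse outweighs age.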

Unexpected Results

As it turned out, I wasn’t even close. The test came early, as one of my first customers was experiencing what seemed to be unreasonably high compressor failures on their rooftop air conditioners. After a postmortem examination of the charred remains of at least one dead compressor, the manufacturer came back with a suggestion that lightning was the likely culprit. This tends to evoke the old joke about how hurricanes and tornadoes seek out trailer parks; apparently lightning does the same for compressors.

Turn the clock forward four years with the deployment of monitoring using automated fault detection and diagnostics, and compressor failures fell to zero…as in none…nada. Based on all known statistics, as these systems continued to age, things should have gotten exponentially worse, but instead they never broke.

Admittedly, thousands of pieces of equipment I monitored over the years were a mere statistical microcosm in numbers compared to all of the equipment that exists in the world, but zero failures were totally unexpected. It brings to mind an old adage in the air conditioning business that says “compressors never die…they are murdered.”

Some technology companies have been using remote diagnostics on various types of equipment for a few years now. Even though there are substantial differences in the types of equipment monitored and the applications they are used for, the benefits and challenges are the same. The fact is, if something is designed correctly in the first place and never gets abused, no one really knows how long it will last, but I’m certain it is far longer than most would imagine.

The Sensor Dilemma

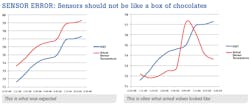

Another very interesting observation was the lack of sensor accuracy. Any veteran of the industry would expect to find a certain percentage of sensors not reading properly in any type of application. What was a surprise was the degree and quantity of sensor inaccuracy. Because such a large percentage of the data received from sensors was so profoundly off, special rules had to be incorporated to not alert users unless the values were off by a substantial amount. This was a matter of practicality due to the overwhelming number of anomalies our software would have generated, so many in fact that I deemed the problem unmanageable otherwise. I resigned myself to the reality that most sensors, specifically in commercial buildings, simply were not capable of what most laymen would consider acceptable accuracy. This would be less expected in industrial applications. However, two interesting observations I have made over the years are that industrial customers often do not check the validity of their sensors, or they tend to ignore what the sensors are telling them and assume something else is the issue.
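A rule of the kind described above might look like the following sketch. It is not the software's actual logic: the function name, the tolerance value, and the requirement of several consecutive bad readings are all assumptions added for illustration.

```python
from typing import Sequence


def flag_sensor(readings: Sequence[float],
                references: Sequence[float],
                tolerance: float,
                min_consecutive: int = 3) -> bool:
    """Return True only when a sensor is off by a substantial amount.

    A reading counts as "off" when it differs from its reference value by
    more than `tolerance`. The sensor is flagged only after
    `min_consecutive` off readings in a row (an assumed persistence rule),
    so small or momentary disagreements never reach the user.
    """
    run = 0
    for reading, reference in zip(readings, references):
        if abs(reading - reference) > tolerance:
            run += 1
            if run >= min_consecutive:
                return True
        else:
            run = 0  # a good reading resets the streak
    return False


# Hypothetical zone temperatures compared against a trusted reference of 72.
print(flag_sensor([72, 80, 81, 82], [72, 72, 72, 72], tolerance=5))
print(flag_sensor([74, 75, 76, 74], [72, 72, 72, 72], tolerance=5))
```

The wider the tolerance, the fewer alerts reach the user, at the price of tolerating sensors that are wrong by anything less than that amount.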

Out of the Box Thinking

As revealing as these observations were, they were not earth shattering in themselves. For years, operators have argued for bigger budgets to hire more staff. The eternal argument is more prevention, less failure, and less failure means less money spent. There have probably been thousands of articles written expounding the financial virtues of reducing catastrophic failures, saving energy, and getting great returns by investing more in preventive maintenance programs. I respectfully disagree.

For several decades, all types of businesses have been continually increasing productivity by maximizing technology. My observations while performing automated fault detection left little doubt that in the case of preventive maintenance, most owners have not even come close to the productivity possible. Based on the data collected, I am convinced that a typical company can conservatively achieve at minimum a 33 percent increase in maintenance productivity, reduce catastrophic failures by at least 90 percent, and reduce energy costs by at least 12 percent. Arguably best of all, they’ll also never see another alarm from their computerized control system. All this can be achieved by maximizing the technology that most already have and by adding a few new wrinkles.

The lesson learned from diagnostics was that failures almost never happen all of a sudden; rather, they almost always develop slowly over time. The research showed that by monitoring gradual changes over time and acting at exactly the right time, failures could be eliminated and equipment would run at peak efficiency.
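One simple way to watch for gradual change is to fit a straight line to recent readings and project when it will reach a limit. The sketch below is an illustration only, not the method used in the software described here. The function names, the motor-current readings, and the limit of 12.0 are all hypothetical.

```python
from typing import Optional, Sequence


def slope_per_sample(values: Sequence[float]) -> float:
    """Least-squares slope of evenly spaced readings, in units per sample."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two readings to estimate a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    numerator = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    denominator = sum((i - mean_x) ** 2 for i in range(n))
    return numerator / denominator


def samples_until_limit(values: Sequence[float], limit: float) -> Optional[float]:
    """Project from the latest reading, using the fitted slope, to the limit.

    Returns 0.0 if the latest reading is already at or past the limit, and
    None if the readings are not trending upward toward it.
    """
    if values[-1] >= limit:
        return 0.0
    slope = slope_per_sample(values)
    if slope <= 0:
        return None
    return (limit - values[-1]) / slope


# Hypothetical daily motor current readings creeping upward.
daily_amps = [10.0, 10.2, 10.4, 10.6, 10.8]
print(samples_until_limit(daily_amps, limit=12.0))  # roughly 6 more days
```

A snapshot alarm set at 12.0 would stay silent through all five of these days. The trend gives roughly a week of warning, which is the window for acting at the right time.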

As wonderful as this discovery was, it began to occur to me that none of my customers were truly taking full advantage of my software. As we were directing maintenance activities at exactly the right time, the customers still went about their normal routines of prevention even though there was no indication it was needed. Ironically, many times soon after performing routine prevention on a piece of equipment, they would get an indication from the software that something else needed attention on the same piece of equipment. To be fair, every customer had a gaping hole of some sort where they lacked the sensors needed to fully automate their maintenance calls, but I determined that filling those holes was more than cost justified.

In trying to somewhat overcome this problem, I came up with clever ways to substitute. For example, since many air conditioning functions required knowledge of outdoor temperature and wet bulb, if the customer did not have those sensors, I substituted National Weather data. The experience was extremely valuable because I became very astute at understanding which sensors were really important, and which could be eliminated without any sacrifice in maximizing the value that could be obtained. The last divide that is left to overcome is the problem of sensor accuracy.

You’re Bad and You Know It

The interesting thing I learned about some of the sensor issues encountered was the way they presented themselves. One would think that if a flow sensor was reading incorrectly, it would show up consistently as reading too high or too low. Not so. More often than not, faulty sensors would be all over the place. They would read too high in the lower range and too low in the upper range, or vice versa. Sometimes this was due to the poor quality of the analog-to-digital (A/D) conversion process in the computerized control system. If you are unfamiliar, most systems take an analog value, such as a small voltage or amperage that varies based on the condition being sensed, and convert it to the ones and zeros a computer can understand. Some manufacturers have low-resolution conversion, which means they can’t read very small changes, while others simply use poor components. This is far truer in commercial applications than industrial. We concluded that since few people in commercial applications were collecting and analyzing the values, the manufacturers responded by giving them less expensive stuff.
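To see what low-resolution conversion means in practice, the sketch below computes the smallest change an ideal converter can report. The 0-to-100-degree span and the 8-bit and 12-bit depths are hypothetical examples, not figures from any particular manufacturer. Real converters add noise and nonlinearity on top of this.

```python
def adc_step(span: float, bits: int) -> float:
    """Smallest change an ideal converter can distinguish across `span`."""
    return span / (2 ** bits - 1)


def quantize(value: float, low: float, high: float, bits: int) -> float:
    """Round `value` to the nearest level an ideal converter can report."""
    levels = 2 ** bits - 1
    clamped = min(max(value, low), high)  # out-of-range inputs saturate
    code = round((clamped - low) / (high - low) * levels)
    return low + code * (high - low) / levels


# Hypothetical temperature input spanning 0 to 100 degrees.
for bits in (8, 12):
    step = adc_step(100.0, bits)
    reported = quantize(50.1, 0.0, 100.0, bits)
    print(f"{bits}-bit: step {step:.3f} degrees, 50.1 is reported as {reported:.3f}")
```

With the coarser converter, any change smaller than about four tenths of a degree is invisible to the control system, no matter how good the sensor itself is.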

There’s Gold, Just Don’t Try to Pan for It

I am totally convinced that there is value in the data that can be collected from computerized systems. If you think about a typical application, there can be literally thousands of data points that can be collected, all of which have some value, with some data points having more value than others. Doing some simple math, if the system has, say, 500 data points, including all inputs, outputs, set-points, schedules, etc., and the average collection interval is, say, 15 minutes, every day you will collect 48,000 data points.
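The arithmetic is easy to check, and easy to rerun for your own point count and collection interval. The function name and the `sites` parameter are illustrative additions.

```python
def samples_per_day(points: int, interval_minutes: int, sites: int = 1) -> int:
    """Number of values collected per day across all points and sites."""
    collections_per_day = (24 * 60) // interval_minutes  # 96 at 15 minutes
    return points * collections_per_day * sites


print(samples_per_day(500, 15))            # 500 points x 96 collections = 48000
print(samples_per_day(500, 15, sites=10))  # ten similar sites = 480000
```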

If you have multiple sites, it gets even more interesting. Now take these 48,000 data points, import them into a SCADA system, and mix and match the right data points together in graphs to see if there is an anomaly. A large part of the visualization is to determine whether the sensors are even close to accurate. Furthermore, different functions will likely include data points that were used in another function. Although it might be possible to look at every combination in less than a day, the value would likely not be high enough to justify the time it takes to perform the task. Alternative two is to use advanced algorithms to do the job automatically. Even then, using today’s high-speed processors, running that many data points through algorithms can be time consuming. Add to that the cost of storing and maintaining all of that information for long periods, and suddenly your budget gets fairly significant. Lastly, literally every day, one can come up with new algorithms that add even more value as some combination of sensors is found to have a relationship that reveals an anomaly. Point is, it isn’t very productive or useful to try to do this with conventional technology. When people talk about big data technology, it is literally the difference between panning for gold and using heavy machinery.

Google to the Rescue

Within the last couple of years, the good folks at companies like Google and Amazon, whose businesses revolve around handling massive data, invented something called cloud computing. The basic principle is to take a massive amount of data and algorithms and break everything into small chunks digested by literally thousands of processors as needed, literally at the speed of light. The reason why this is a big idea when it comes to maintenance is that it reduces the time to process literally millions of data points down to seconds. Using cloud computing, data collection, sorting, and functions can be fully automated to immediately produce results in the form of work orders in near real time. Furthermore, algorithms can be added ad hoc as more functions are developed with very little effort. Lastly, budgets are simplified through a minimal monthly service fee, which, as the industry continues to scale and grow, should eventually become fairly trivial.
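The break-it-into-chunks idea can be shown in miniature on a single computer. In the sketch below, a small pool of threads stands in for the thousands of cloud processors, and counting out-of-range readings stands in for a real diagnostic algorithm. All names, limits, and chunk sizes are assumptions for illustration. In standard Python, threads will not actually speed up this kind of calculation; the point is only the split, process, and combine pattern.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Iterator, List, Sequence


def chunked(items: Sequence[float], size: int) -> Iterator[Sequence[float]]:
    """Yield consecutive slices of `items`, each at most `size` long."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def count_out_of_range(chunk: Sequence[float], low: float, high: float) -> int:
    """Stand-in for a diagnostic algorithm: count readings outside limits."""
    return sum(1 for value in chunk if value < low or value > high)


def parallel_out_of_range(readings: Sequence[float], low: float, high: float,
                          chunk_size: int = 1000, workers: int = 4) -> int:
    """Split the readings, hand each chunk to a worker, and add up the results."""
    chunks: List[Sequence[float]] = list(chunked(readings, chunk_size))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        counts = pool.map(lambda c: count_out_of_range(c, low, high), chunks)
        return sum(counts)


# Hypothetical day of readings: mostly normal, with three bad values.
readings = [70.0] * 2500 + [120.0] * 3
print(parallel_out_of_range(readings, low=50.0, high=90.0))  # 3
```

Because each chunk is processed independently, adding more workers or a new algorithm does not require changing how the data is collected.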

The Savings We Are Missing

When you think about it, the way we perform maintenance is incredibly inefficient. When one looks at the way preventive maintenance programs are initiated, regardless of whether there is a computerized maintenance management system (CMMS) or not, the frequency of maintenance tasks such as greasing motors or changing filters is at best an educated guess. There’s almost no chance that maintenance is performed precisely as it should be. With certainty, we know that the frequency of preventive maintenance is either too low or too high. Regardless of which is true in any given case, it carries a heavy cost penalty. Simply put, we need to combine the technology of sensors, cloud computing, and algorithms to tell us exactly when something needs attention. It just makes sense that the next logical evolution in maintenance services is the adoption of “Just in Time” service. Imagine a facility that requires 30 percent fewer people, saves an average of 12 percent more energy, and reduces failures by 90 percent. We are not suggesting this as merely a leap into better efficiency, but more as a survival tactic, as the industry will continue to struggle to find an adequate supply of qualified technicians. Excluding the benefit of far fewer failures, the estimate of simple return on investment today is easily within six months. Simply put, six months of savings should easily pay for one year of monitoring.

The Last Elephant in the Room

OK, so this is all well and good. However, it isn’t as though maintenance people have not at one time or another attempted to implement sensors to notify them when maintenance should be performed. Perhaps the best example is the infamous attempt to monitor when to change air filters on an air-handling unit. The usual suspects are inexpensive paddle switches or differential-pressure switches. Because of their unreliability and/or lack of accuracy, operators found themselves inundated with false alarms. Instead of increasing efficiency, the opposite occurred in what could best be described as a “chase your tail” effect.

Based on our findings, it is apparent that better sensors are needed for all types of applications. As the old saying goes, garbage in equals garbage out. In the past, regardless of how much one invested in quality sensors, eventually they would drift. Here is the good news: there are companies today that are developing self-calibrating sensors. The goal is that sensors you install today will remain accurate for at least 10 years. Sound implausible? With today’s powerful microprocessors, affordable maintenance-free sensors are right around the corner. The best news is that not only will owners be assured of always getting the correct reading, but the payback from eliminating drift and the constant re-commissioning of sensors will easily justify the added cost.

John Pitcher is the CEO of Weber Sensors, a 50-year-old German manufacturer of flow products based on the calorimetric principle of operation. Previously he was the founder of Scientific Conservation, which was one of the first companies to use cloud computing for fault detection and diagnostics. Mr. Pitcher’s 40-year career covers many product development and leadership roles in the automation and energy efficiency fields. He can be reached at 770 592-6630 or john.pitcher@captor.com.