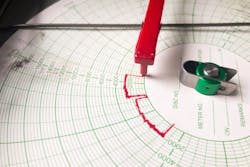

Forty years ago, I was working at Amoco Oil. At that time, the job that electronic flow measurement (EFM) does today, measuring how much oil and gas flows through a pipeline, was done with round paper chart recorders.

A pen would chart the pressure and temperature over the course of a month, and then someone would physically collect the round charts so engineers could integrate the temperature and pressure with a calculator to determine volume (as shown in Figure 1). Engineers had hundreds of these paper charts lying around as they tried to make sense of the flow data.

Sometime in the early 1980s, the technology was developed to measure the data electronically and integrate it on a computer. The American Petroleum Institute (API) then released a standard stating that if you are going to measure oil and gas and sell it, the measurement must be accurate.

There was a rush to automate EFM for better measurement, and millions of flow computers were installed at companies worldwide. Over the next 30 years, about a dozen manufacturers built flow computers and about a dozen EFM protocols were created, along with even more customer-specific, proprietary solutions.

The challenges with varied EFM data types

The primary concern for EFM today is that most existing networks do not have the bandwidth to meet all of the demands for data. Companies are forced into decisions they should not have to make: they must decide which data to leave stranded because they cannot retrieve all of it. They are sacrificing data availability because of bandwidth limits.

Networks run short of bandwidth because EFM protocols are poll/response. The host sends out a poll, waits for the flow computer to assemble a response, receives the response, and only then moves on to the next flow computer. Because each exchange must finish before the next one starts, the time to scan every device grows with the number of flow computers and the latency of the link. Poll/response protocols are therefore limited: you cannot poll fast enough on the network, and a lot of valuable data is left stranded in the field.
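To make the polling limit concrete, here is a rough Python model of a sequential poll/response scan. Every number in it (latency, response-assembly time, message sizes, link speed) is an illustrative assumption rather than a measurement from a real EFM network, and `PollLink` and its helper functions are hypothetical names used only for this sketch.

```python
from dataclasses import dataclass


@dataclass
class PollLink:
    """Assumed timing for one poll/response exchange (illustrative values only)."""
    round_trip_s: float   # network round-trip latency
    assembly_s: float     # time the flow computer needs to build its response
    request_bytes: int    # size of the poll request
    response_bytes: int   # size of the response
    bits_per_s: float     # usable link bandwidth

    def exchange_time_s(self) -> float:
        """One complete exchange; the host waits for it before polling the next device."""
        transmit_s = (self.request_bytes + self.response_bytes) * 8 / self.bits_per_s
        return self.round_trip_s + self.assembly_s + transmit_s


def poll_cycle_time_s(link: PollLink, flow_computers: int) -> float:
    """Sequential polling: the scan time grows linearly with the number of devices."""
    return flow_computers * link.exchange_time_s()


def max_devices_per_scan(link: PollLink, scan_period_s: float) -> int:
    """How many flow computers can be polled before the scan period runs out."""
    return int(scan_period_s // link.exchange_time_s())


# Hypothetical satellite-style link: 600 ms round trip, 400 ms to assemble a response,
# and a 250-byte exchange over 9,600 bit/s.
link = PollLink(round_trip_s=0.6, assembly_s=0.4, request_bytes=8,
                response_bytes=242, bits_per_s=9600)
print(f"{link.exchange_time_s():.3f} s per exchange")               # 1.208 s per exchange
print(f"{poll_cycle_time_s(link, 100):.1f} s to scan 100 devices")  # 120.8 s to scan 100 devices
print(max_devices_per_scan(link, 60.0), "devices fit in a 60 s scan")  # 49 devices fit in a 60 s scan
```

With these assumed values, a 60-second scan reaches only 49 flow computers. Adding devices, or requesting more data from each one, lengthens every cycle, which is the ceiling the paragraph above describes.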

The industry is abuzz with artificial intelligence, process optimization, increasing production and more. All of these activities mean multiple data consumers, more strain on bandwidth and more siloed data sets.

The good news is that in order to enable these new technologies, companies do not need to re-instrument their entire system. All of the equipment out there is doing the job well; we just need to get better access to the data.

MQTT solves EFM challenges

If we forget about the past 30 years of EFM technology, eliminate any preconceived notions, and invent something new today, how would we want it to work? We would want a flow computer plugged into a modern TCP/IP network. We would want to connect, authenticate and then find everything we want to know. It should be that simple.
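On a TCP/IP network, "connect and authenticate" maps onto MQTT's CONNECT packet. The sketch below builds an MQTT 3.1.1 CONNECT packet by hand, carrying a username and password, to show how little is involved. The client ID and credentials are made up, and a production client would use an established MQTT library over TLS rather than hand-encoding packets.

```python
def encode_remaining_length(n: int) -> bytes:
    """MQTT variable-length integer: 7 data bits per byte, high bit means 'more follows'."""
    if not 0 <= n <= 268_435_455:
        raise ValueError("remaining length out of range")
    out = bytearray()
    while True:
        n, digit = divmod(n, 128)
        out.append((digit | 0x80) if n else digit)
        if n == 0:
            return bytes(out)


def encode_utf8(s: str) -> bytes:
    """UTF-8 string prefixed by a 2-byte big-endian length, as MQTT requires."""
    data = s.encode("utf-8")
    return len(data).to_bytes(2, "big") + data


def build_connect(client_id: str, username: str, password: str,
                  keep_alive_s: int = 60) -> bytes:
    """Build an MQTT 3.1.1 CONNECT packet with username/password authentication."""
    flags = 0x80 | 0x40 | 0x02  # username flag, password flag, clean session
    variable_header = (encode_utf8("MQTT")     # protocol name
                       + bytes([4, flags])    # protocol level 4 = MQTT 3.1.1
                       + keep_alive_s.to_bytes(2, "big"))
    # Payload order is fixed by the spec: client ID, (will fields), username, password.
    # The password travels in clear text unless the TCP connection is wrapped in TLS.
    payload = encode_utf8(client_id) + encode_utf8(username) + encode_utf8(password)
    body = variable_header + payload
    return bytes([0x10]) + encode_remaining_length(len(body)) + body  # 0x10 = CONNECT


packet = build_connect("fc-001", "efm-reader", "example-password")
print(len(packet), packet[:10].hex())  # 50 103000044d51545404c2
```

The whole 50-byte packet carries the protocol name, version, flags, keep-alive interval, client ID and credentials.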

Fortunately, there is a way to do that efficiently, using all of the advantages of the underlying TCP/IP networks we have today. It turns out, we do not need to use poll/response.

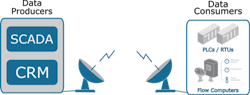

In 1999, I co-invented MQ Telemetry Transport (MQTT) with Dr. Andy Stanford-Clark of IBM for running a pipeline; MQTT later became an open OASIS standard. The project was for Phillips 66, which wanted to use VSAT (Very Small Aperture Terminal) communications more efficiently for its real-time, mission-critical SCADA system. Multiple data consumers wanted access to the real-time information (see Figure 2).

MQTT is an extremely simple and lightweight publish-subscribe messaging protocol. It is designed for constrained devices and low-bandwidth, high-latency or unreliable networks. MQTT minimizes network bandwidth and device resource requirements while attempting to ensure reliability and some degree of assurance of delivery. Because MQTT runs directly on top of TCP/IP, it can use the standard security built for those networks, such as TLS.
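To see how lightweight the framing is, the sketch below encodes an MQTT 3.1.1 PUBLISH packet. For packets under 128 bytes the fixed header is two bytes and the topic name carries a two-byte length prefix, so the MQTT overhead beyond the topic and payload is four bytes at QoS 0 (two more at QoS 1 for the packet identifier), not counting TCP/IP headers. The topic `efm/fc-001/flow_rate` and the plain-text payload are hypothetical; Sparkplug, for example, uses a Protocol Buffers payload instead.

```python
def remaining_length(n: int) -> bytes:
    """MQTT variable-length integer encoding of the remaining packet length."""
    out = bytearray()
    while True:
        n, digit = divmod(n, 128)
        out.append((digit | 0x80) if n else digit)
        if n == 0:
            return bytes(out)


def build_publish(topic: str, payload: bytes, qos: int = 0,
                  packet_id: int = 0, retain: bool = False) -> bytes:
    """Encode an MQTT 3.1.1 PUBLISH packet (QoS 0 or 1 only in this sketch)."""
    if qos not in (0, 1):
        raise ValueError("this sketch only handles QoS 0 and 1")
    topic_bytes = topic.encode("utf-8")
    variable_header = len(topic_bytes).to_bytes(2, "big") + topic_bytes
    if qos == 1:
        variable_header += packet_id.to_bytes(2, "big")  # lets the PUBACK be matched
    body = variable_header + payload
    first_byte = 0x30 | (qos << 1) | int(retain)  # type 3 = PUBLISH, plus QoS/retain flags
    return bytes([first_byte]) + remaining_length(len(body)) + body


topic, payload = "efm/fc-001/flow_rate", b"1234.5"
packet = build_publish(topic, payload)
overhead = len(packet) - len(topic) - len(payload)
print(len(packet), "bytes on the wire,", overhead, "bytes of MQTT framing")
# 30 bytes on the wire, 4 bytes of MQTT framing
```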

Replacing a poll/response EFM network with an MQTT-based network saves 80% to 95% of bandwidth, because devices publish data when it changes instead of answering every poll with a full response. That means stranded data can be rescued.
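One mechanism behind savings of this kind is report by exception: instead of returning every value on every poll, a device publishes only the values that changed. The arithmetic below compares the two approaches for a hypothetical flow computer. The point count, message sizes and change rate are invented purely to show the calculation, not measured, and the function names are hypothetical.

```python
from typing import Iterable


def poll_response_bytes(intervals: int, points: int, bytes_per_point: int,
                        request_bytes: int, header_bytes: int) -> int:
    """Every interval a request goes out and a full response (all points) comes back."""
    return intervals * (request_bytes + header_bytes + points * bytes_per_point)


def report_by_exception_bytes(changed_per_interval: Iterable[int], bytes_per_point: int,
                              header_bytes: int) -> int:
    """One message per interval that had changes, carrying only the changed points."""
    return sum(header_bytes + n * bytes_per_point for n in changed_per_interval if n > 0)


def savings_percent(baseline: int, reduced: int) -> float:
    return 100.0 * (1 - reduced / baseline)


# Hypothetical hour: 50 points, a 10-second interval (360 intervals), 6 bytes per point,
# 10 bytes of message header, and on average 5 of the 50 points changing per interval.
polled = poll_response_bytes(360, points=50, bytes_per_point=6, request_bytes=8, header_bytes=10)
published = report_by_exception_bytes([5] * 360, bytes_per_point=6, header_bytes=10)
print(polled, published, f"{savings_percent(polled, published):.1f}% saved")
# 114480 14400 87.4% saved
```

In this model the benefit comes entirely from how slowly the data changes: if every point changed in every interval, report by exception would save almost nothing.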

MQTT also allows for multiple data consumers. You can publish the data from an EFM device once, and multiple applications can consume it at the same time. MQTT allows for a single source of truth for data, and because that data follows an open standard, anyone can use it.
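The fan-out described above can be illustrated with a tiny in-memory stand-in for a broker. It is not an MQTT implementation (no network, no QoS, no wildcards), and the class, consumer and topic names are hypothetical; it only shows the publish-subscribe pattern in which one publication reaches every subscribed consumer, so the device is read once however many applications need the data.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Handler = Callable[[str, bytes], None]


class TinyBroker:
    """In-memory stand-in for a broker: exact-match topics, synchronous delivery."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: bytes) -> int:
        """Deliver one publication to every subscriber of the topic; return how many."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(topic, payload)
        return len(handlers)


broker = TinyBroker()
inboxes: Dict[str, List[bytes]] = {"scada": [], "historian": [], "analytics": []}
for inbox in inboxes.values():
    # Bind each inbox as a default argument so every lambda keeps its own list.
    broker.subscribe("efm/fc-001/flow_rate", lambda topic, payload, box=inbox: box.append(payload))

delivered = broker.publish("efm/fc-001/flow_rate", b"1234.5")  # published once...
print(delivered, inboxes)                                       # ...received by all three consumers
```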

MQTT is widely used as the messaging protocol in IoT solutions, and it is time for the oil and gas industry to catch up and use it for EFM. We do not need to prove that MQTT has become a dominant IoT transport; people use it because it is simple and efficient and it runs on a small footprint. You can publish any data that you want on any topic.
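Because publishers are free to choose topics, subscribers usually select data with topic filters. The function below is a sketch of the MQTT 3.1.1 matching rules, where `+` matches exactly one topic level and a trailing `#` matches a level and everything beneath it. The `efm/...` topic layout is a hypothetical example, not a standard.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic name matches a subscription filter.

    '+' matches exactly one level (which may be empty); '#' must be the last
    level of the filter and matches its parent level plus any levels below it.
    """
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    # Topics starting with '$' (e.g. broker statistics) are not matched
    # by filters that begin with a wildcard.
    if topic.startswith("$") and filter_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Valid only as the final level; an invalid filter matches nothing here.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


print(topic_matches("efm/+/flow_rate", "efm/fc-001/flow_rate"))  # True
print(topic_matches("efm/#", "efm/fc-002/temperature"))          # True
print(topic_matches("efm/+", "efm/fc-001/flow_rate"))            # False
```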

We recently created a specification within the Eclipse Tahu project called Sparkplug that defines how to use MQTT in a mission-critical, real-time environment. Sparkplug defines a standard MQTT topic namespace, payload and session state management for industrial applications while meeting the requirements of real-time SCADA implementations. Sparkplug is a great starting point for how to use MQTT in EFM.
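As an illustration of the Sparkplug topic namespace, the sketch below builds and parses Sparkplug B topics of the form `spBv1.0/<group_id>/<message_type>/<edge_node_id>[/<device_id>]`. Node-level message types (NBIRTH, NDEATH, NDATA, NCMD) omit the device ID, and device-level types (DBIRTH, DDEATH, DDATA, DCMD) require it. It also shows the 0 to 255 wrap-around of the payload sequence number used in session state tracking. The group, node and device names are hypothetical, and the Protocol Buffers payloads and host-application STATE messages are left out; this is a sketch of the topic rules, not a substitute for the specification itself.

```python
from typing import NamedTuple, Optional

NAMESPACE = "spBv1.0"
NODE_TYPES = {"NBIRTH", "NDEATH", "NDATA", "NCMD"}
DEVICE_TYPES = {"DBIRTH", "DDEATH", "DDATA", "DCMD"}


class SparkplugTopic(NamedTuple):
    group_id: str
    message_type: str
    edge_node_id: str
    device_id: Optional[str] = None


def build_topic(t: SparkplugTopic) -> str:
    """Join the topic levels, enforcing node-level vs. device-level message types."""
    if t.message_type in NODE_TYPES and t.device_id is None:
        levels = [NAMESPACE, t.group_id, t.message_type, t.edge_node_id]
    elif t.message_type in DEVICE_TYPES and t.device_id:
        levels = [NAMESPACE, t.group_id, t.message_type, t.edge_node_id, t.device_id]
    else:
        raise ValueError(f"invalid Sparkplug topic fields: {t}")
    return "/".join(levels)


def parse_topic(topic: str) -> SparkplugTopic:
    """Split a node or device topic back into its fields (STATE topics not handled)."""
    levels = topic.split("/")
    if levels[0] != NAMESPACE or len(levels) not in (4, 5):
        raise ValueError(f"not a Sparkplug B node/device topic: {topic}")
    parsed = SparkplugTopic(*levels[1:])
    build_topic(parsed)  # re-use the builder's checks on message type and device ID
    return parsed


def next_seq(seq: int) -> int:
    """Payload sequence numbers count 0..255 and then wrap back to 0."""
    return (seq + 1) % 256


topic = build_topic(SparkplugTopic("Pipeline", "DDATA", "gateway-01", "fc-001"))
print(topic)              # spBv1.0/Pipeline/DDATA/gateway-01/fc-001
print(parse_topic(topic))
print(next_seq(255))      # 0
```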

A common misconception in the industry is that modernizing EFM requires a large financial investment along with a lot of time and effort. MQTT is an open standard with open-source implementations, and it can be implemented on existing legacy equipment.
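One way to put MQTT in front of legacy equipment is an edge gateway that keeps polling the flow computer locally over its existing protocol and publishes upstream only when a value moves by more than a deadband. The sketch below shows just that change-detection step. The local polling, the MQTT client and the deadband value are assumptions, and `ExceptionReporter` is a hypothetical name.

```python
from typing import Callable, Dict, List, Tuple

Publish = Callable[[str, float], None]


class ExceptionReporter:
    """Gateway-side change detection: publish a value only if it moved past a deadband."""

    def __init__(self, publish: Publish, deadband: float) -> None:
        self._publish = publish      # e.g. a wrapper around an MQTT client (not shown)
        self._deadband = deadband
        self._last_sent: Dict[str, float] = {}

    def process(self, reading: Dict[str, float]) -> int:
        """Handle one locally polled reading; return how many values were published."""
        sent = 0
        for name, value in reading.items():
            previous = self._last_sent.get(name)
            # Compare against the last *published* value so slow drift is still reported.
            if previous is None or abs(value - previous) > self._deadband:
                self._publish(name, value)
                self._last_sent[name] = value
                sent += 1
        return sent


published: List[Tuple[str, float]] = []
reporter = ExceptionReporter(lambda name, value: published.append((name, value)), deadband=0.5)
counts = [
    reporter.process({"pressure": 512.0, "temperature": 60.0}),  # first reading: both sent
    reporter.process({"pressure": 512.2, "temperature": 60.0}),  # inside deadband: none sent
    reporter.process({"pressure": 513.0, "temperature": 60.1}),  # pressure moved 1.0: sent
]
print(counts, published)
```

Because the legacy protocol only runs over the short local link, the flow computer itself does not need to change; only the gateway speaks MQTT upstream.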

Arlen Nipper is the president and CTO of Cirrus Link and has over 42 years of experience in the SCADA industry. He was one of the early architects of pervasive computing and the internet of things (IoT) and co-invented MQTT, a publish-subscribe network protocol that has become the dominant messaging standard in IoT. Nipper holds a bachelor’s degree in electrical and electronics engineering (BSEE) from Oklahoma State University. He can be reached at [email protected].
